Inter TEP Communication: Unpacking One of The Latest Features In NSX-T 3.1

Making Tunnel Endpoints Simpler!

This post will include a brief recap of how Tunnel Endpoints and Transport VLANs have had to be configured for communication in prior releases of NSX-T. It will then focus on how the release of NSX-T 3.1 has changed the way Tunnel Endpoints for Edge Appliances that reside on NSX-T hosts can be configured.

Table of Contents

- What is a TEP?

- How they communicate: Both host to host and host to Edge Appliances

- How TEPs need to be configured when the Edge Appliances reside on a host transport node in releases prior to NSX-T 3.1

- A streamlined method of TEP configuration in NSX-T 3.1

Note: All screenshots in this article have been taken in an NSX-T 3.1 environment.

What is a Tunnel Endpoint?

TEPs are a critical component of NSX-T. Each hypervisor participating in NSX-T (known as a host transport node) and each Edge Appliance will have at minimum one TEP IP address assigned to it. Host transport nodes and Edge Appliances may have two TEP IP addresses to load balance traffic; this is generally referred to as multi-TEP.

TEP IP Assignment:

- Host transport node TEP IP addresses can be assigned statically, using DHCP, or using predefined IP Pools in NSX-T.

- Edge Appliance TEP IP addresses can be assigned statically or using a pool.

TEPs are the source and destination IP addresses used in the IP packet for communication between host transport nodes and Edge Appliances. Without a correct design, you will more than likely have communication issues between the host transport nodes participating in NSX-T and your Edge Appliances, which will leave the GENEVE tunnels between endpoints down. If your GENEVE tunnels are down, you will NOT have any communication between the endpoints on either side of the downed tunnel.

The images below show the MAC address table for a logical switch on a host transport node.

Notice that there are columns for Inner MAC, Outer MAC and Outer IP under the LCP section, and that the MAC addresses have been spread across the two TEP IPs. If we dig a little deeper, we can identify what exactly those inner MACs are and where they came from, and then repeat the same for the outer MACs. Let's start by identifying the logical switch in question.

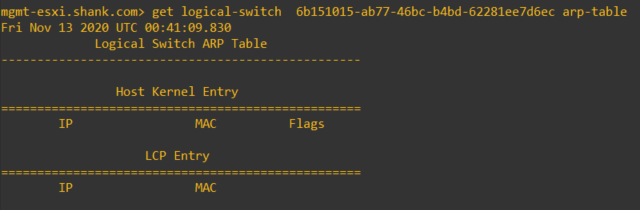

Now that we have the logical switch's name and UUID, we can dig a little deeper. There are a couple of ways to do this, but since we have the name of the logical switch, I will continue to use nsxcli. The next command we can run is 'get logical-switch 6b151015-ab77-46bc-b4bd-62281ee7d6ec arp-table'. Change the UUID to suit your environment.

Wait a second... why are there no entries, especially since we know there is a workload on this segment? Chances are that either the Virtual Machine (VM) has not sent an Address Resolution Protocol (ARP) request, or it has and the table has since been flushed. We can repopulate this table by simply initiating a ping from a VM on this segment. The image below shows the same table after I initiated a ping from a VM on the segment.

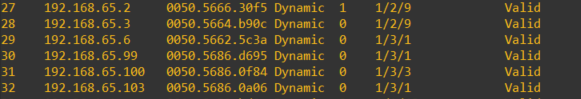

Excellent, now we have an entry in the table. If we scroll up to the first image, you can see that the inner MAC is the same as the MAC address from this entry. That means the inner MAC is the MAC address of the source VM's vNIC. That leaves us having to identify the remaining two outer MACs, which is pretty easy to do. There are a couple of ways to do this, but let's continue with nsxcli and the logical switch we have been working with.

This time we can run 'get logical-switch 6b151015-ab77-46bc-b4bd-62281ee7d6ec vtep-table'. You should see output similar to the image below.

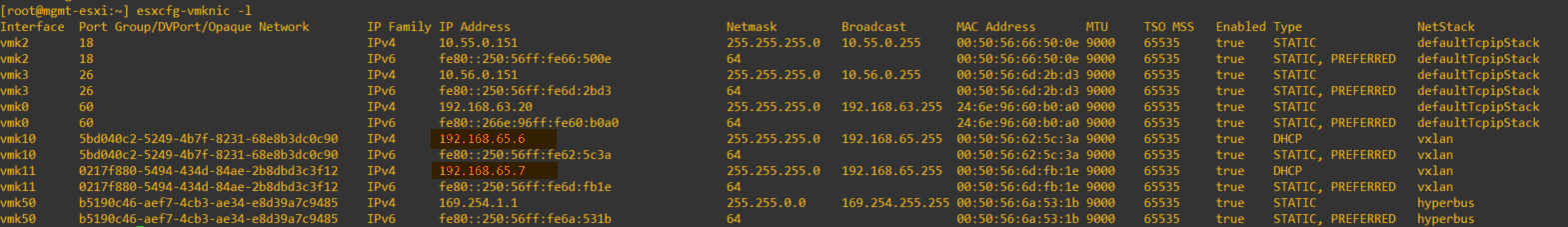

The above output may not be enough to determine which outer MAC belongs to each host transport node. To verify this, you can exit out of nsxcli, type in 'esxcfg-vmknic -l', and see the below output.

Here we can see that the outer MACs are the MAC addresses of the VMkernel NICs used for NSX-T, vmk10 and vmk11. Therefore, we can deduce that payload from within the NSX-T domain is encapsulated in a packet whose outer header uses the source and destination TEP IP and MAC addresses.

How Do TEPs Communicate?

NSX-T TEPs communicate with each other by using their source and destination IP addresses. The TEPs can be in different Transport VLANs (different subnets) and still communicate. If this is part of your requirements, you must ensure those Transport VLANs (subnets) are routable and can reach each other.
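
As a quick illustration of the routing requirement, the sketch below checks whether two TEP IP addresses fall in the same subnet. It is only a planning aid, not something NSX-T provides. The function names and the /24 prefix length are assumptions made for this example; substitute the mask used by your own Transport VLANs.

```python
import ipaddress


def same_transport_subnet(tep_a: str, tep_b: str, prefix_len: int = 24) -> bool:
    """Return True when both TEP IPs sit in the same subnet.

    prefix_len is an assumption for this sketch (/24); use your real mask.
    """
    net_a = ipaddress.ip_interface(f"{tep_a}/{prefix_len}").network
    net_b = ipaddress.ip_interface(f"{tep_b}/{prefix_len}").network
    return net_a == net_b


def needs_routing(tep_a: str, tep_b: str, prefix_len: int = 24) -> bool:
    """TEPs in different subnets can only talk if the subnets are routable."""
    return not same_transport_subnet(tep_a, tep_b, prefix_len)


if __name__ == "__main__":
    # Addresses taken from the lab used in this article.
    print(needs_routing("192.168.65.6", "192.168.65.100"))  # same subnet
    print(needs_routing("192.168.65.6", "192.168.66.100"))  # different subnets
```

When `needs_routing` returns True, the physical network must route between the two Transport VLANs for the tunnel to form.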

NSX-T TEPs use GENEVE encapsulation and communicate over UDP port 6081. As a bare minimum, you must ensure this port is open when designing NSX-T for your environment. Keep in mind that this article covers the TEP networks (Transport VLANs); other ports still need to be opened for NSX-T to function as intended, and these are documented in VMware Ports: NSX-T Datacenter. You must also ensure that the MTU in your physical fabric is set to a minimum of 1600 bytes; the VMware Validated Design recommends setting the MTU to 9000. This needs to be set end to end in your physical environment, that is, wherever NSX-T enabled hosts and Edge Appliances are running. If this requirement is not met, you will end up with packet fragmentation, which will likely cause your tunnels to remain down and/or other unwanted behaviour.
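
To see roughly where the 1600-byte minimum comes from, the sketch below adds up the encapsulation overhead for a standard 1500-byte workload MTU. The header sizes are the standard ones for Ethernet, IPv4, UDP and the GENEVE base header. The function names are made up for this example, and the number of GENEVE option bytes NSX-T actually adds is not covered in this article, so it is left as a parameter.

```python
# Standard header sizes in bytes.
INNER_ETHERNET = 14   # inner frame header carried inside the tunnel
GENEVE_BASE = 8       # fixed GENEVE header, before any options
UDP_HEADER = 8
OUTER_IPV4 = 20       # outer IPv4 header without IP options

MAX_GENEVE_OPTIONS = 252  # 6-bit length field counted in 4-byte words


def required_transport_mtu(inner_mtu: int = 1500, geneve_options: int = 0) -> int:
    """Outer IP packet size needed to carry an inner packet of inner_mtu bytes."""
    if geneve_options % 4 or not 0 <= geneve_options <= MAX_GENEVE_OPTIONS:
        raise ValueError("GENEVE options must be a multiple of 4, from 0 to 252")
    return (inner_mtu + INNER_ETHERNET + GENEVE_BASE + geneve_options
            + UDP_HEADER + OUTER_IPV4)


def fits(transport_mtu: int, inner_mtu: int = 1500, geneve_options: int = 0) -> bool:
    """True when the fabric MTU carries the encapsulated packet unfragmented."""
    return required_transport_mtu(inner_mtu, geneve_options) <= transport_mtu


if __name__ == "__main__":
    print(required_transport_mtu())   # overhead with no GENEVE options
    print(fits(1600), fits(1500))     # recommended minimum vs. default MTU
```

With no options the encapsulated packet already exceeds a default 1500-byte fabric MTU, and 1600 leaves some headroom for GENEVE options.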

Below is an example of an IP packet that is being sent from one host transport node to the other using nsxcli on the host. You can perform a similar action in your environment by running 'start capture interface vmnic4 direction dual file capture expression srcip 192.168.65.7'

The example below is the same output as above, but with the capture written to a file and opened in Wireshark for easier reading.

Once the packet is decapsulated the payload is exposed and actioned accordingly. This article focusses on TEPs and will not go into logical routing and switching of payload data.
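
For readers who want to look at the encapsulation itself, here is a minimal sketch of building and parsing the 8-byte GENEVE base header that sits between the outer UDP header and the inner frame. The field layout follows the GENEVE specification (RFC 8926). The function names and the example VNI are made up for illustration, and the sketch does not decode any options, including whatever NSX-T places there.

```python
import struct

GENEVE_UDP_PORT = 6081
ETHERTYPE_TRANS_ETHER_BRIDGING = 0x6558  # inner payload is an Ethernet frame


def build_geneve_header(vni: int, opt_len_words: int = 0, oam: bool = False,
                        critical: bool = False,
                        protocol: int = ETHERTYPE_TRANS_ETHER_BRIDGING) -> bytes:
    """Pack a GENEVE base header (version 0) for the given 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    if not 0 <= opt_len_words < 2 ** 6:
        raise ValueError("option length must fit in 6 bits")
    first = (0 << 6) | opt_len_words            # Ver (2 bits) + Opt Len (6 bits)
    second = (0x80 if oam else 0) | (0x40 if critical else 0)  # O, C, reserved
    return struct.pack("!BBH", first, second, protocol) + vni.to_bytes(3, "big") + b"\x00"


def parse_geneve_header(data: bytes) -> dict:
    """Decode the base header from the start of a UDP payload."""
    if len(data) < 8:
        raise ValueError("GENEVE base header is 8 bytes")
    first, second, protocol = struct.unpack("!BBH", data[:4])
    options_len = (first & 0x3F) * 4            # length field is in 4-byte words
    return {
        "version": first >> 6,
        "options_len": options_len,
        "oam": bool(second & 0x80),
        "critical": bool(second & 0x40),
        "protocol": protocol,
        "vni": int.from_bytes(data[4:7], "big"),
        "payload_offset": 8 + options_len,      # where the inner frame starts
    }


if __name__ == "__main__":
    header = build_geneve_header(vni=71680)     # hypothetical VNI
    print(parse_geneve_header(header))
```

The `payload_offset` value marks where the inner Ethernet frame begins, which is the payload exposed after decapsulation.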

Below is the ARP table from the top of rack switch. Here you can see that it has learnt the TEP IP addresses from the hosts. The MAC addresses listed are those of the hosts' VMkernel ports or the Edge Appliance's vNIC.

For those that like visuals, this is a quick diagram of how the hosts are wired and a topology of the lab that will be used in this article.

The above has demonstrated how hosts communicate via their TEP IP addresses. This behaviour is the same for host to edge communication.

How TEPs need to be configured when Edge Appliances reside on a host transport node in releases prior to NSX-T 3.1

In prior releases of NSX-T, when you deployed either a fully collapsed cluster (host/compute/edge) or a compute and edge cluster and were running a single N-VDS, the NSX-T Edge TEPs had to be in a different Transport VLAN (subnet) from the host transport node TEPs. If you had more than two physical NICs, with a vDS carrying all management traffic and the edges plumbed into it, and a dedicated set of NICs for the NSX-T N-VDS, you could use the same Transport VLAN for host transport node TEPs and Edge Appliances.

The IP packets had to be routed from the host transport nodes to the Edge Appliance and vice versa. That is, the GENEVE packets had to ingress/egress on a physical uplink of the host, as there was no datapath within the host for the GENEVE tunnels.

If this did not occur, the packets were sent internally within the transport node, unencapsulated and with the same VLAN ID (Transport VLAN), and were subsequently dropped.

Note: In NSX-T 3.1 inter TEP communication will not work if you use a vDS port group. In order for inter TEP communication to work in this release, you must use a VLAN-backed segment created in NSX-T.

The next set of images will show an example of the port group configuration and of two packet captures, one on a host transport node and the other on the Edge Appliance. In this scenario, the edge is configured with two vDS trunk portgroups and its TEP IP addresses are in the same Transport VLAN as the host transport nodes.

This is a screenshot of the Edge Appliance's TEP interfaces.

Host transport node with TEP IPs in the same range.

vCenter view showing the Edge appliances are in fact sitting on the management host which is prepared for NSX-T.

Now you should have a clearer picture of how the environment is set up. The next part of the article shows that inter TEP communication does not work with this setup: the Edge Appliance sits on a host transport node, both are on the same Transport VLAN, and the packet is not routed externally and brought back into the edge.

Provided below is a packet capture from the host transport node. Here you can see a packet being sent from TEP IP 192.168.65.6 to 192.168.65.100 (Edge TEP IP).

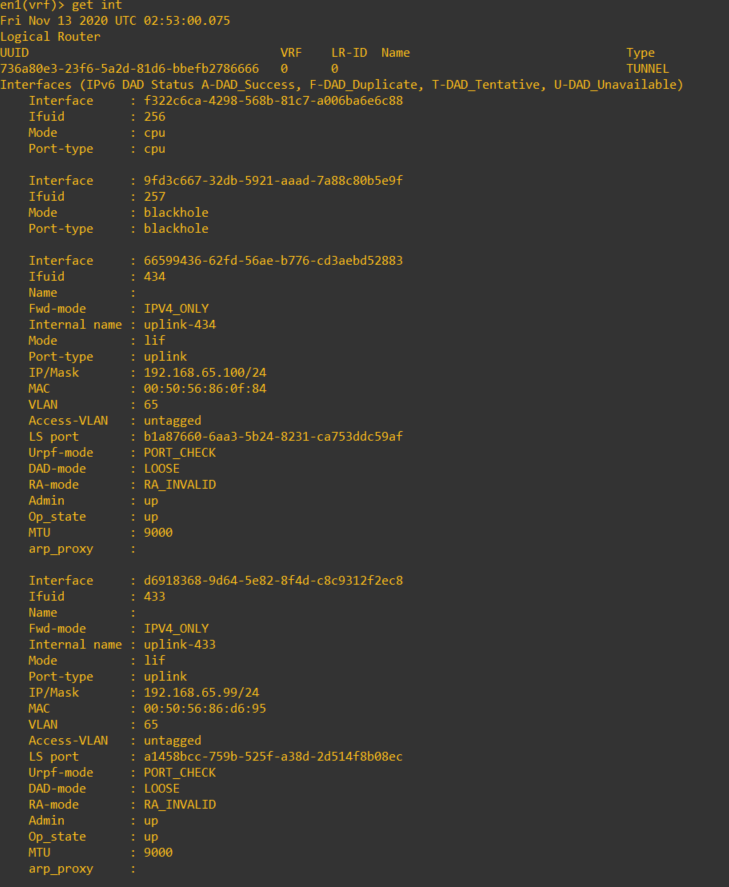

Provided below is another packet capture, this time on the Edge Appliance. If you want to single out a TEP interface, SSH into the appliance as admin, type in vrf 0 and then get interfaces. In the list, there is a field called interface. Copy the interface ID of the one you wish to run the capture on. The command to use is "start capture interface <id> direction dual file <filename>".

This is a Wireshark view of the same capture to make reading easier.

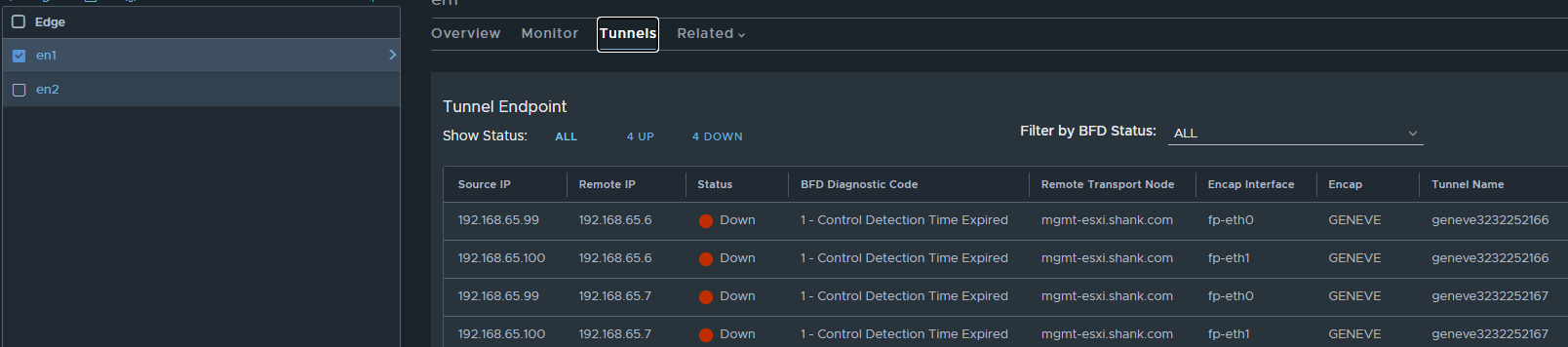

Here is what you will see in the NSX-T Manager UI.

Testing the same configuration with the Edge Appliances in a different Transport VLAN

I won't go into changing the uplink profile and updating the IP settings on the node itself, but in the below screenshot you can see the addresses for the TEP interface on en1 have changed from 192.168.65.x to 192.168.66.x.

Immediately the tunnels come up.

The packet capture below, taken on the Edge, also shows that the control messages are now passing correctly and that the packets are no longer being dropped.

In summary, to configure TEPs when Edge Appliances reside on a host transport node in releases prior to NSX-T 3.1, ensure they are not on the same Transport VLAN. If they are, ensure they are not on the same vDS/N-VDS, so that the GENEVE tunnels come up.

Additionally, it has been demonstrated that inter TEP communication did not work in versions prior to NSX-T 3.1.

How has this changed in NSX-T 3.1?

Inter TEP communication has been added in the latest release of NSX-T. This means you can have your edge TEPs and host transport node TEPs in the same Transport VLAN.

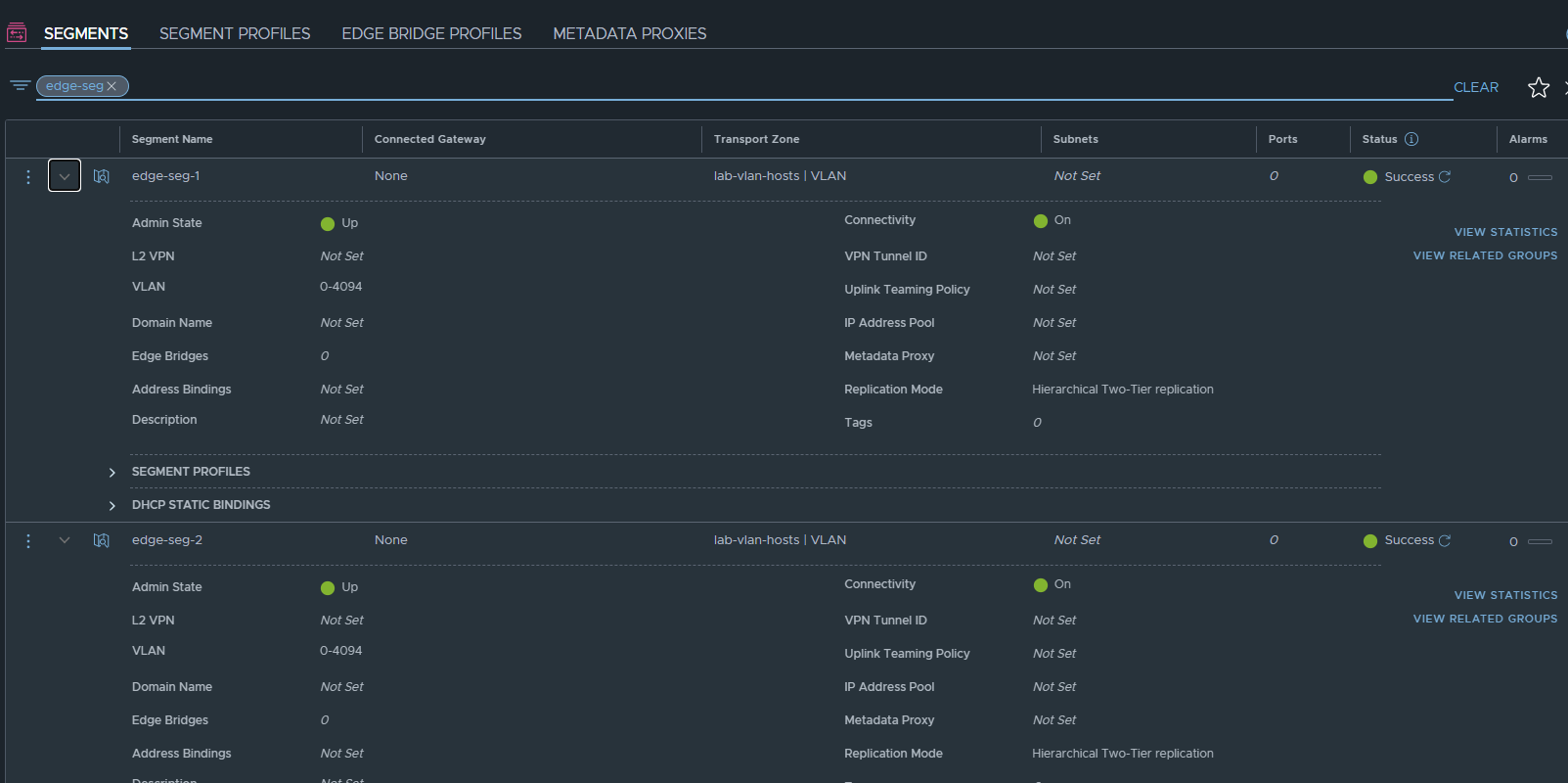

As we saw earlier in this post, even in NSX-T 3.1, inter TEP communication does not work when the edge TEPs are in the same Transport VLAN as the host TEPs and are not on a separate vDS and set of NICs. You may have also noticed that, above the vDS portgroups for the edges, there were two additional VLAN-backed segments created in NSX-T, labelled 'edge-seg-1' and 'edge-seg-2'.

The screenshot below shows their configuration in NSX-T.

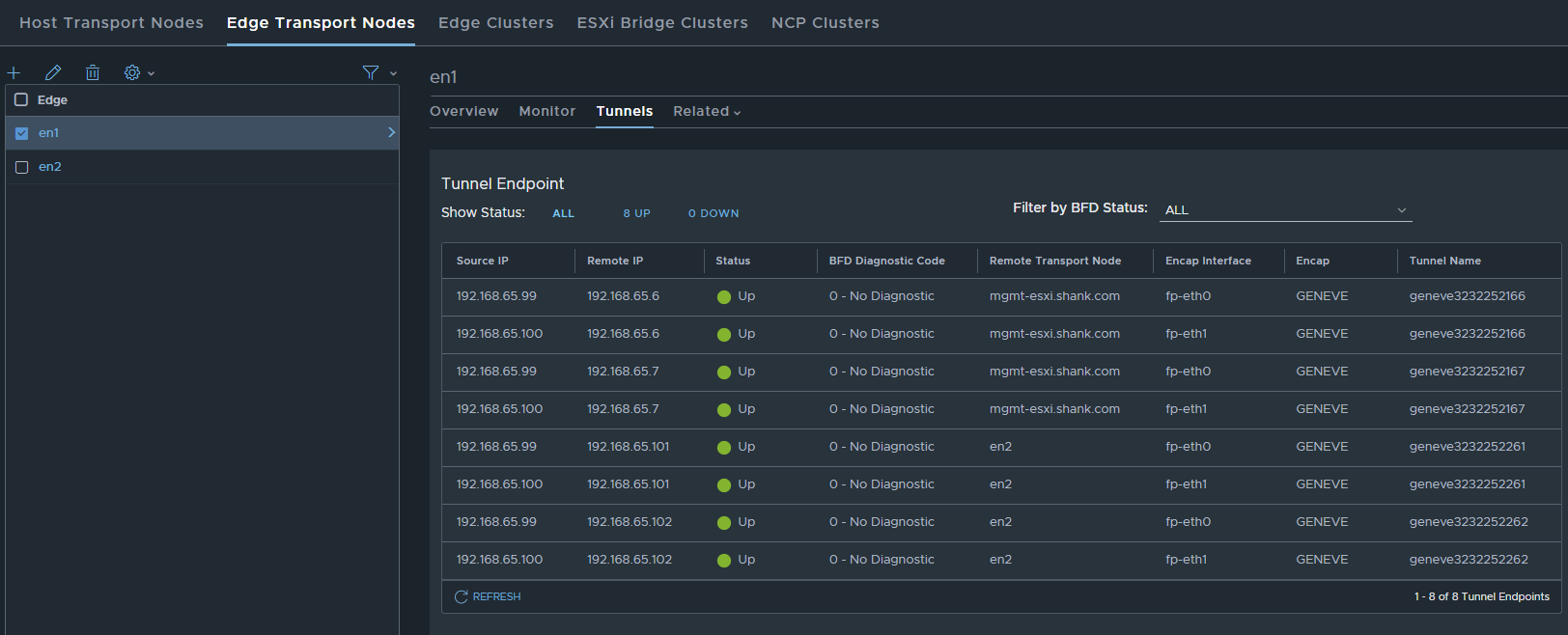

Next I will attach these segments as the uplinks for the edge transport node VMs and reconfigure the profiles to use the same Transport VLAN (65).

The screenshot below is of en1 and its NIC configuration.

TEP interfaces on the edge appliance, showing the same IP range. And now if we go back into the UI, the tunnels are up. And here is a packet capture on the edge to show the BFD control messages coming through.

Conclusion

With the release of NSX-T 3.1, inter TEP communication is now possible. That means we are able to use the same Transport VLAN for host transport node TEP IP addresses and Edge Appliances. The caveat is that you must use VLAN-backed segments created in NSX-T for this communication to work. Inter TEP communication is not a must, and you may still use different VLANs for TEP addressing. However, this is a long-awaited feature and will make some customers very happy!
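
The rules covered in this article can be condensed into a small decision helper. This is only a sketch of the behaviour observed in this lab, not an official VMware check. The function name, parameter names and the two backing labels are hypothetical, and it assumes everything else (routing between VLANs, UDP 6081, MTU) is already correct.

```python
def tunnel_expected_up(nsx_version: tuple,
                       edge_on_host_transport_node: bool,
                       same_transport_vlan: bool,
                       shares_host_switch: bool,
                       edge_uplink_backing: str) -> bool:
    """Estimate whether host-to-edge GENEVE tunnels should come up.

    nsx_version         -- e.g. (3, 0) or (3, 1)
    shares_host_switch  -- edge uplinks use the same vDS/N-VDS and NICs as the host TEPs
    edge_uplink_backing -- "vds_portgroup" or "nsx_vlan_segment" (labels made up here)
    """
    if not edge_on_host_transport_node:
        return True   # traffic always leaves the host via a physical uplink
    if not same_transport_vlan:
        return True   # packets are routed externally and brought back in
    if not shares_host_switch:
        return True   # separate vDS and NICs: packets still egress the host
    if nsx_version < (3, 1):
        return False  # no datapath inside the host before 3.1
    # 3.1 and later: inter TEP works only over NSX-T VLAN-backed segments.
    return edge_uplink_backing == "nsx_vlan_segment"


if __name__ == "__main__":
    # The failing scenario from this article: same VLAN, vDS trunk portgroups.
    print(tunnel_expected_up((3, 1), True, True, True, "vds_portgroup"))
    # The working scenario: same VLAN, edge uplinks on edge-seg-1 / edge-seg-2.
    print(tunnel_expected_up((3, 1), True, True, True, "nsx_vlan_segment"))
```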
